30/05/2013

How Vodafone Greece degrades my Internet experience

The title may sound a bit pompous, but please read on and you’ll see how certain decisions can cripple, or totally disrupt modern Internet services and communications as these are offered(?) by Vodafone’s mobile Internet solutions.

== The situation ==

I’ve bought a mobile Internet package from Vodafone Greece in order to be able to have 3G access in places where I don’t have access to wifi or ethernet. I am also using a local caching resolver on my laptop (Debian Linux), running unbound software, to both speed up my connections and to have mandatory DNSSEC validation for all my queries. Many of you might ask why do I need DNSSEC validation of all my queries since only very few domains are currently using DNSSEC, well I don’t have a reply that applies to everyone, let’s just say for now that I like to experiment with new things. After all, this is the only way to learn new things, experiment with them. Let’s not forget though that many TLDs are now signed, so there are definitely a few records to play with. Mandatory DNSSEC validation has led me in the past to identify and investigate a couple other problems, mostly having to do with broken DNSSEC records of various domains and more importantly dig deeper into IPv6 and fragmentation issues of various networks. This last topic is so big that it needs a blog post, or even a series of posts, of it’s own. It’s my job after all to find and solve problems, that’s what system or network administrators do (or should do).

== My setup ==

When you connect your 3G dongle with Vodafone Greece, they sent you 2 DNS servers (two out of 213.249.17.10, 213.249.17.11, 213.249.39.29) through ipcp (ppp). In my setup though, I discard them and I just keep “nameserver 127.0.0.1” in my /etc/resolv.conf in order to use my local unbound. In unbound’s configuration I have set up 2 forwarders for my queries, actually when I know I am inside an IPv6 network I use 4 addresses, 2 IPv4 and 2 IPv6 for the same 2 forwarders. These forwarders are hosted where I work (GRNET NOC) and I have also set them up to do mandatory DNSSEC validation themselves.

So my local resolver, which does DNSSEC validation, is contacting 2 other servers who also do DNSSEC validation. My queries carry the DNS protocol flag that asks for DNSSEC validation and I expect them to validate every response possible.

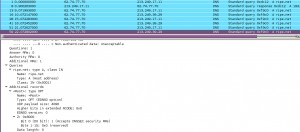

As you can see in the following screenshot, here’s what happens when I want to visit a website. I ask my local caching resolver, and that resolver asks one of it’s forwarders adding the necessary DNSSEC flags in the query.

The response might have the “ad” (authenticated) DNSSEC flag, depending whether the domain I’m visiting is DNSSEC signed or not.

== The problem ==

What I noticed was that using this setup, I couldn’t visit any sites at all when I connected with my 3G dongle on Vodafone’s network. When I changed my /etc/resolv.conf to use Vodafone’s DNS servers directly, everything seemed work as normal, at least for browsing. But then I tried to query for DNSSEC related information on various domains manually using dig, Vodafone’s resolvers never sent me back any DNSSEC related information. Well actually they never sent me back any packet at all when I asked them for DNSSEC data.

Here’s an example of what happens with and without asking for DNSSEC data. The first query is without requesting DNSSEC information and I get a normal reply, but upon asking for the extra DNSSEC data, I get nothing back.

[Screenshot of ripe.net +dnssec query through Vodafone’s servers]

== Experimentation ==

Obviously changing my forwarders configuration in unbound to the Vodafone DNS servers did not work because Vodafone’s DNS servers never send me back any DNSSEC information at all. Since my unbound is trying to do DNSSEC validation of everything, obviously including the root (.) zone, I need to get back packets that contain these records. Else everything fails. I could get unbound working with my previous forwarders or with Vodafone’s servers as forwarders, only by disabling the DNSSEC validation, that is commenting out the auto-trust-anchor-file option.

Then I started doing tests on my original forwarders that I had in my configuration (and are managed by me). I could see that my query packets arrived at the server and the server always sent back the proper replies. But whenever the reply contained DNSSEC data, that packet was not forwarded to my computer through Vodafone’s 3G network.

More tests were to follow and obviously my first choice were Google’s public resolvers, 8.8.8.8 and 8.8.4.4. Surprise, surprise! I could get any DNSSEC related information I wanted. The exact same result I got upon testing with OpenDNS resolvers, 208.67.222.222 and 208.67.220.220. From a list of “fairly known” public DNS servers that I found here, only ScrubIT servers seems to be currently blocked by Vodafone Greece. Comodo DNS, Norton DNS, and public Verizon DNS all work flawlessly.

My last step was to try and get DNSSEC data over tcp instead of udp packets. Surprise, surprise again, well not at all any more… I could get back responses containing the DNSSEC information I wanted.

== Conclusion ==

Vodafone Greece for some strange reason (I have a few ideas, starting with…disabling skype) seems to “dislike” large UDP responses, among which are obviously DNS replies carrying DNSSEC information. These responses can sometimes be even bigger than 1500bytes. My guess is that in order to minimize hassle for their telephone support, they have whitelisted a bunch of “known” DNS servers. Obviously the thought of breaking DNSSEC and every DNSSEC signed domain for their customers hasn’t crossed their minds yet. What I don’t understand though is why their own DNS servers are not whitelisted. Since they trust other organizations’ servers to send big udp packets, why don’t they allow DNSSEC from their own servers? Misconfiguration? Ignorance? On purpose?

The same behavior can (sometimes -> further investigation needed here) be seen while trying to use OpenVPN over udp. Over tcp with the same servers, everything works fine. That reminds me I really need to test ocserv soon…

== Solution ==

I won’t even try to contact Vodafone’s support and try to convince their telephone helpdesk to connect me to one of their network/infrastructure engineers. I think that would be completely futile. If any of you readers though, know anyone working at Vodafone Greece in _any_ technical department, please send them a link to this blog post. You will do a huge favor to all Vodafone Greece mobile Internet users and to the Internet itself.

The Internet is not just for HTTP stuff, many of us use it in various other ways. It is unacceptable for any ISP to block, disrupt, interrupt or get in the middle of such communications.

Each one of us users should be able to use DNSSEC without having to send all our queries to Google, OpenDNS or any other information harvesting organization.

== Downloads ==

I’m uploading some pcaps here for anyone who wants to take a look. Use wireshark/tcpdump to read them.

A. tcpdump querying for a non-DNSSEC signed domain over 3G. One query without asking for DNSSEC and two queries asking for DNSSEC, all queries go to DNS server 194.177.210.10. All queries arrived back. The tcpdump was created on 194.177.210.10.

vf_non-dnssec_domain_query.pcap

B. tcpdump querying for a DNSSEC signed domain over 3G. One query without asking for DNSSEC and three queries asking for DNSSEC, all queries go to DNS server 194.177.210.10. The last three queries never arrived back at my computer. The tcpdump was created on 194.177.210.10.

vf_dnssec_domain_query.pcap

C. tcpdump querying for a DNSSEC signed domain over 3G. One query without asking for DNSSEC and another one asking for DNSSEC, all queries go to DNS Server 8.8.8.8. All queries arrived back. The tcpdump was created on my computer using the PPP interface.

vf_ripe_google_dns.pcap

Filed by kargig at 11:11 under Encryption,Greek,Internet,Linux,Networking,Privacy

Filed by kargig at 11:11 under Encryption,Greek,Internet,Linux,Networking,Privacy

Tags: dig, dns, dnssec, Linux, pcap, tcpdump, unbound, validation, vodafone, Vodafone Greece, wireshark

3 Comments | 24,796 views

3 Comments | 24,796 views